Picture a rally in Pyongyang, North Korea. Thousands of people are waving flags and cheering while soldiers survey the crowd, looking for signs of discontent and people who are visibly faking their love for the regime. Now imagine those soldiers have cameras that can assess facial expressions, informing them with certainty who is being insincere.

This scenario is not improbable. While emotion detection tech is not widespread, it is far from fiction. Private companies and governments are already using it. Democracies and human rights activists need to be prepared for the implications of this technology and work to build international consensus against its use before it becomes widespread.

The next stage in invasive technology

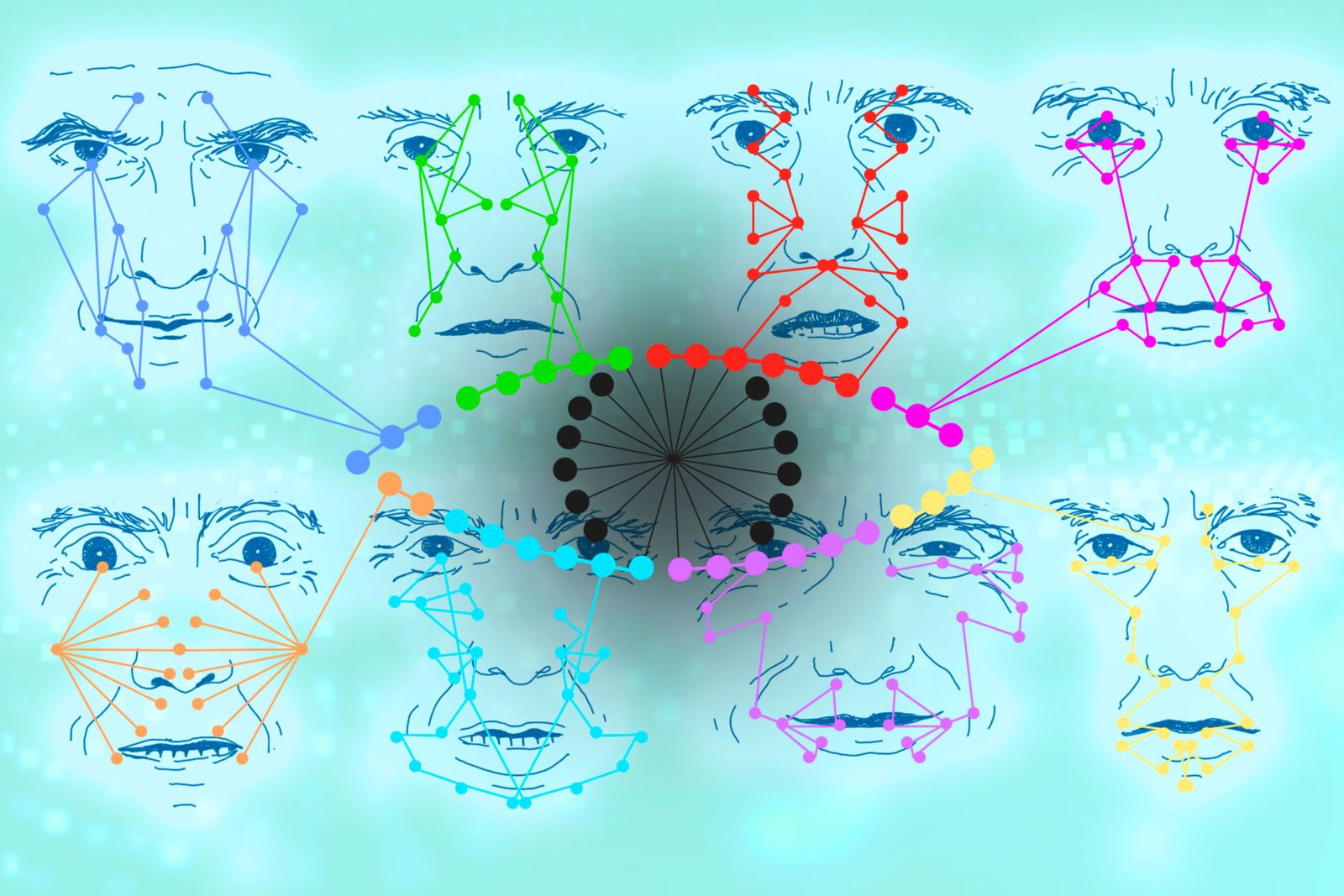

While facial recognition technology already presents challenges for human rights protection, emotion recognition software is a step further into dystopian territory. It falls under the category of affective computing, which uses data, algorithms, and artificial intelligence to recognize and influence human emotions. Like many forms of technology, its potential for societal benefit can be easily manipulated for exploitation and repression.

Something very close to the Pyongyang hypothetical is already happening in China. According to reporting from the BBC, Uyghurs in the Xinjiang Uyghur Autonomous Region are subjected to testing with this software. While the process of non-consensual testing alone is an egregious violation of people’s rights, the socio-political context in Xinjiang hints at the sinister ways this technology could be used by authoritarian regimes.

A significant driver behind the Chinese government’s repression in Xinjiang is Beijing’s desire to crack down on any separatist beliefs among people living in the Uyghur region. Emotion detection software is built on the largely disputed premise that people display a universal set of emotions - that there are reliable and consistent signs of genuine happiness, for example. Authoritarian actors could theoretically attempt to use emotion detection software to identify false enthusiasm in someone reciting patriotic mantras or spot an insincere denunciation of separatist feeling. It would be a coup de grâce for those seeking to fully control their populations in body and mind - if it worked.

In its present state, the technology is highly flawed, which presents further dangers. For instance, in domains like law enforcement, an imperfect reading of a defendant’s emotions could lead to inaccurate conclusions and potentially false convictions. Some experts have called for an outright ban on this form of technology in the United States for this reason, arguing that it threatens civil liberties by assuming that facial movements and tone of voice can be used as evidence of criminality. Research professor Kate Crawford has drawn parallels between emotion detection software and polygraphs, or ‘lie detectors’, as both are deeply problematic and unreliable forms of technology that can cause serious harm when misused by authorities.

Given that using emotion recognition technology as a tool of governance rests on an entirely flawed premise, a ban makes sense. In a recent interview with me, Vidushi Marda and Shazeda Ahmed, authors of an Article 19 report on the topic, said that emotion detection is “so invasive and problematic that a complete ban on experimenting with, testing, and building these technologies is the only way to go”. Besides this, they said that the fundamental premise of the technology, that there is a “set of emotions that people should be feeling”, is so flawed and dangerous that nobody should be using it to inform decisions on governance, business, or anything else.

A brief window to act

While a good start, bans by individual democracies will not stop authoritarian governments from using, adapting, and selling emotion detection technology to other countries. Whether using facial recognition AI to profile ethnic minorities or tracking people’s movements through a compulsory phone app, governments have shown time and again that they will use technology to its fullest, most ruthless potential.

The growing use of this technology will strengthen authoritarian actors’ ability to engage in transnational repression, undermine democracies, and “reshape international norms and institutions to serve their own interests”. The longer emotion recognition technology has time to bed into key governance areas such as national security and policing, the harder it will become to turn opinion against it.

Democracies have an urgent responsibility to act. The Digital Nations (DN), a forum of countries with leading digital and tech economies established with the aim of promoting responsible tech use among governments, is well placed to lead the fight on this issue. Although its membership is small, it is truly global, with member states in Europe, Asia, Latin America, and Oceania; this diversity would help DN build international consensus for a global moratorium on emotion detection software to prevent its proliferation.

As a smaller, nimbler multilateral grouping, DN may also be more insulated from the criticisms of bureaucracy and deadlock that larger groupings like the United Nations face. Marda and Ahmed highlight that “most historic technology bans come from international agreements that certain technologies should not be pursued”. A communique declaring DN member states’ intention to ban this technology and calling on others to implement their own bans would be a strong start to turning global public opinion against emotion detection software. Such a communique should also highlight and demonstrate the sheer ineffectiveness of emotion detection as an investment - appealing to pragmatism as well as ethics.

Now is also a key moment for activists to campaign against further rollout and implementation of emotion detection software. Because this technology area remains relatively under the radar of many policymakers, it has the potential to become a Trojan horse issue that does serious damage before it is even noticed. Human rights organizations should direct their advocacy efforts toward building policymakers’ awareness of the dangers of this technology. Campaigning efforts should also focus on raising public awareness, applying further pressure on politicians and governments to take strict action on emotion detection software before its use gains acceptance.

Building consensus against an uncharted technology like emotion detection will take time and will not succeed on every front. However, if democracies take a unified approach, they will ultimately ensure a norm cascade that prevents this technology from taking hold and decimating privacy, freedom of expression, and myriad other human rights. Only this proactive, precautionary approach will help get a handle on this technology before dangerous attempts to read citizens’ thoughts and feelings become standard practice in governments worldwide.